Can an autoregressive transformer learn how to solve the Knight's tour?

October 2024 - January 2025

Independent

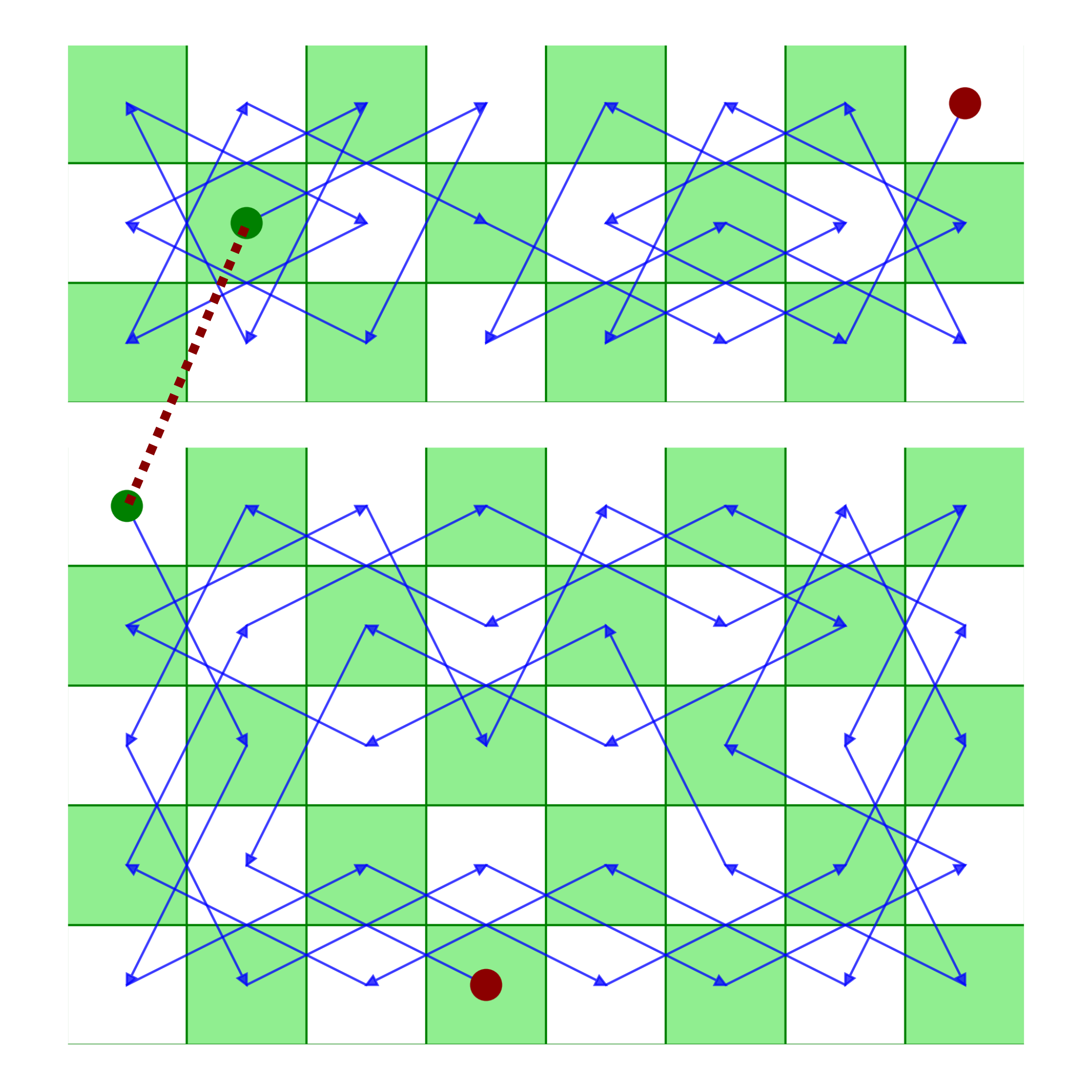

Summary: I trained a 57-million-parameter version of GPT-2 on tens of millions of knight's tour puzzles encoded as linear indices and evaluated whether the model could solve unseen "Parberry" puzzles.

Extended Description: "Can large language models generalize?" remains an open question among language modeling and artificial intelligence researchers. Investigating this question with LLMs trained on internet corpora is challenging, for three reasons.

First, there is methodological debate and uncertainty about how to test for generality. Second, generating out-of-distribution examples is difficult: the internet is vast, and it is hard to verify that supposedly out-of-distribution evaluation examples actually are out-of-distribution. Third, even if truly novel out-of-distribution examples can be crafted, interpretability methods are not yet advanced enough to determine whether the model solved a problem through genuine reasoning or through mere interpolation or pattern recognition.

Games and puzzles offer a unique opportunity to investigate generalization because they allow us to tightly constrain the training and evaluation examples. Motivated by Li et al. (2022) and Ruoss et al. (2022), and after noticing that the knight's tour had never previously been used as a test of generalization, I trained a GPT-2 variant on tens of millions of knight's tour puzzles encoded as linear indices and evaluated whether the model could solve unseen partial puzzles. I found that the models were able to generalize, and that the extent of generalization increased with the amount of training data. The version of the model trained on ~25 million knight's tours solved 999 of 1191 Parberry puzzles (about 84%).
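To make "encoded as linear indices" concrete, here is a minimal sketch assuming a row-major convention in which square (row, col) on an n×n board becomes the integer row·n + col, so a complete tour is a permutation of 0 to n²-1 in which every consecutive pair is a knight move. The helper names, the row-major convention, and the search routine (backtracking with Warnsdorff's fewest-onward-moves ordering, one common way to generate tours) are illustrative assumptions; the tokenization and data generator in the knightsGPT repository may differ.

```python
from typing import List, Optional, Tuple

# The eight knight moves as (row offset, column offset).
KNIGHT_MOVES = [(1, 2), (2, 1), (-1, 2), (-2, 1), (1, -2), (2, -1), (-1, -2), (-2, -1)]


def to_index(row: int, col: int, n: int = 8) -> int:
    """Row-major linear index of square (row, col) on an n x n board (assumed convention)."""
    return row * n + col


def to_square(index: int, n: int = 8) -> Tuple[int, int]:
    """Inverse of to_index: linear index -> (row, col)."""
    return divmod(index, n)


def is_valid_tour(seq: List[int], n: int = 8) -> bool:
    """True if seq visits every square exactly once and each step is a knight move."""
    if sorted(seq) != list(range(n * n)):
        return False
    for a, b in zip(seq, seq[1:]):
        (r1, c1), (r2, c2) = to_square(a, n), to_square(b, n)
        if (r2 - r1, c2 - c1) not in KNIGHT_MOVES:
            return False
    return True


def find_tour(n: int = 8, start: int = 0) -> Optional[List[int]]:
    """Backtracking search trying moves in Warnsdorff order (fewest onward moves first)."""
    path, visited = [start], {start}

    def unvisited_neighbors(index: int) -> List[int]:
        r, c = to_square(index, n)
        return [to_index(r + dr, c + dc, n) for dr, dc in KNIGHT_MOVES
                if 0 <= r + dr < n and 0 <= c + dc < n
                and to_index(r + dr, c + dc, n) not in visited]

    def extend() -> bool:
        if len(path) == n * n:
            return True
        candidates = unvisited_neighbors(path[-1])
        for nxt in sorted(candidates, key=lambda s: len(unvisited_neighbors(s))):
            path.append(nxt)
            visited.add(nxt)
            if extend():
                return True
            path.pop()  # dead end: undo the move and try the next candidate
            visited.remove(nxt)
        return False

    return path if extend() else None


tour = find_tour(5)
print(tour, is_valid_tour(tour, 5))  # a 5x5 tour as a sequence of 25 linear indices
```

Under this encoding, a candidate completion produced by a model can be scored automatically with a check like `is_valid_tour`.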

Code for generating the training examples, model setup, training, and evaluation can be found here: https://github.com/AsteroidHunter/knightsGPT

A faithful replication of AlexNet

June 2024 - August 2024

Independent

Krizhevsky et al. (2012) is a foundational paper that is often credited with kickstarting the deep learning revolution. The paper predates the PyTorch library, so the authors built the model, the data processing pipeline, and all associated components from scratch.

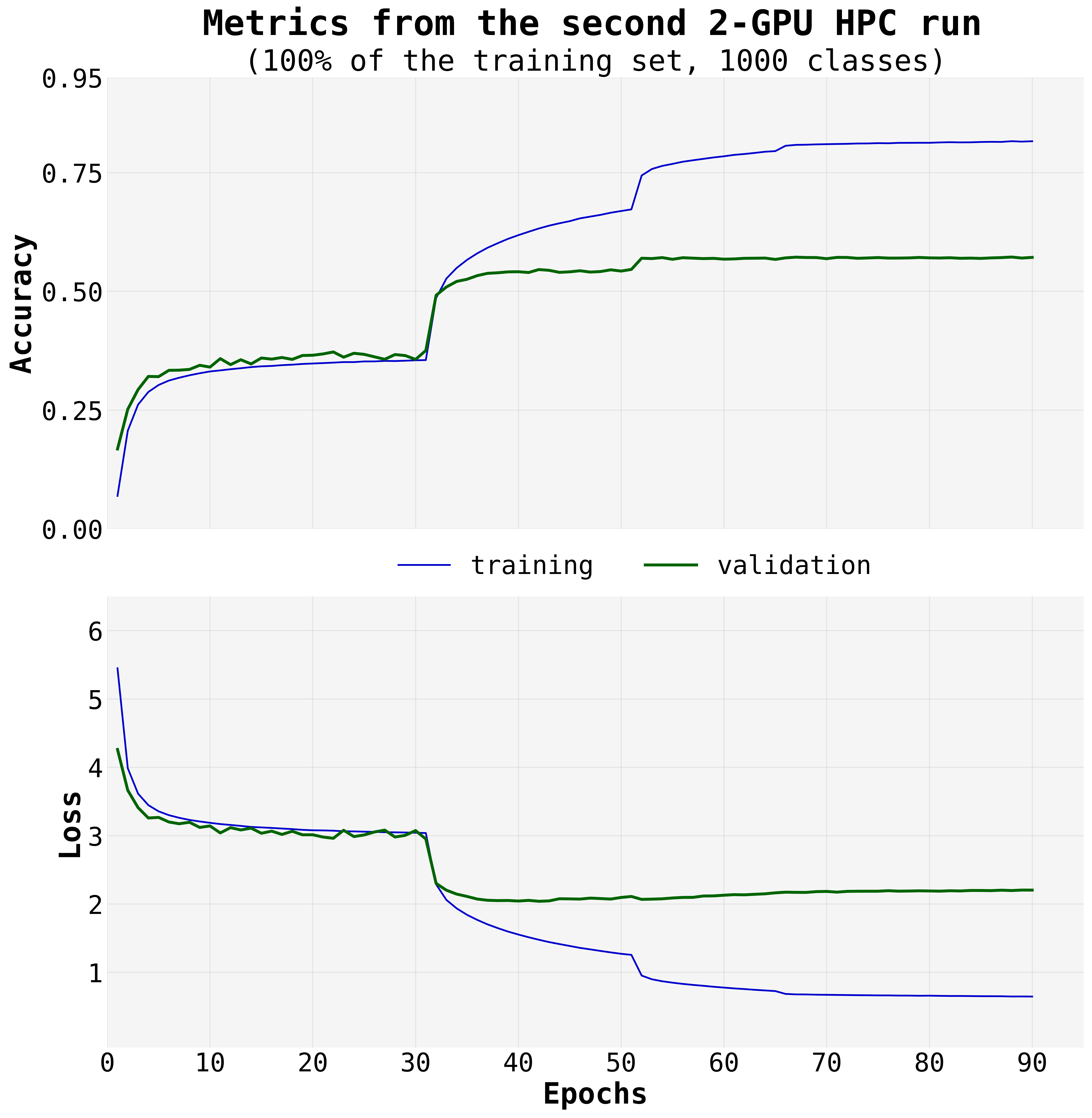

For this project, my goal was to gain more familiarity with research engineering practices in deep learning. I read the paper section by section and replicated everything the authors did in Jupyter notebooks; LLM usage was limited to conceptual questions, debugging, and some idea generation (but not code generation). I wanted the replication to be as faithful as possible, so I used the entire ~150 GB ImageNet 2010 dataset and the same two-GPU split architecture the authors used. While other AlexNet replications exist, this is the first that shards the model across GPUs the same way the authors originally did. My model's top-1 and top-5 accuracies were within 2.8% of those reported in the original paper.

The final code for the data augmentation, model training & evaluation, along with notes on challenges faced can be found here: https://github.com/AsteroidHunter/replicatingAlexNet

shellbert: an LLM-based turtle with a personality

June 2025 - Present

Independent

Shellbert is an LLM with a personality that will serve two primary functions:

1. Automatically finding and posting impact-relevant jobs and opportunities in Tucson Effective Altruism's Discord server.

2. Answering questions related to moral philosophy, economics, global health, animal welfare, and other topics covered in the UArizona course Pathways to Progress.

To better perform these two core tasks, the model will be able to search the web, carry out simple agentic tasks, and use tools. Beyond these two goals, the project has two further motivations: gaining practical experience with alignment and personality engineering, and with remote model deployment.

Shellbert is currently based on the instruction-tuned versions of the Gemma 3n models. The model is hosted on a personal remote server with four NVIDIA RTX 3060s (although the bot typically uses just a single GPU).

You can find shellbert here: https://github.com/AsteroidHunter/shellbert

Building a local "high-performance" cluster

February 2025; late April to early May 2025

Independent

I did not have enough VRAM for my projects, so a friend and I started an AI club, submitted a funding proposal for a remote computing cluster, secured a grant from the university, and sourced and assembled a local GPU cluster with 48 GB of VRAM.

I took charge of setting up the cluster's software, modeling the setup on the University of Arizona's high-performance computing systems, which I had used extensively as an undergraduate and graduate research assistant. Concretely, this involved:

— Setting up Duo 2-factor authentication for all users

— Implementing security measures for preventing brute-force attacks

— Configuring the SLURM workload manager for job scheduling

— Installing NVIDIA drivers and CUDA, and uv for Python environment management

— General maintenance, and debugging problems as they arise

Size & Albedo distribution of Near-Earth Asteroids observed by NEOWISE

December 2022 - December 2023

Graduate Research Assistant, NEOWISE/NEOS

Summary: We used NEATM (Harris, 1998) and a nonlinear optimization pipeline to model and physically characterize thermally dominated observations of ~2200 Near-Earth Asteroids (NEAs). This work was done while I was part of the NEO Surveyor science team, and the results were presented at the Planetary Defense Conference (2023).
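For context, the standard NEATM formulation (Harris, 1998) treats the asteroid as a sphere in instantaneous thermal equilibrium with sunlight: temperature peaks at the subsolar point, falls to zero at the terminator, and the night side is assumed not to emit. The observed thermal flux is then modeled by integrating Planck emission from this temperature map over the part of the dayside visible to the observer. The textbook expressions are below, where $A$ is the Bond albedo, $S_\odot$ the solar constant at 1 AU, $r_h$ the heliocentric distance in AU, $\eta$ the beaming parameter, $\varepsilon$ the emissivity, $\sigma$ the Stefan-Boltzmann constant, $\theta$ and $\phi$ latitude and longitude measured from the subsolar point, $p_V$ the visible geometric albedo, and $G$ the phase slope parameter. Which of these were held fixed and which were fit in this pipeline is not specified here.

```latex
T_{ss} = \left[ \frac{(1 - A)\, S_\odot}{\eta\, \varepsilon\, \sigma\, r_h^{2}} \right]^{1/4}

T(\theta, \phi) = T_{ss} \cos^{1/4}\theta \, \cos^{1/4}\phi
  \quad \text{(dayside; } T = 0 \text{ on the night side)}

A = q\, p_V, \qquad q = 0.290 + 0.684\, G
```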

My three core contributions to this project:

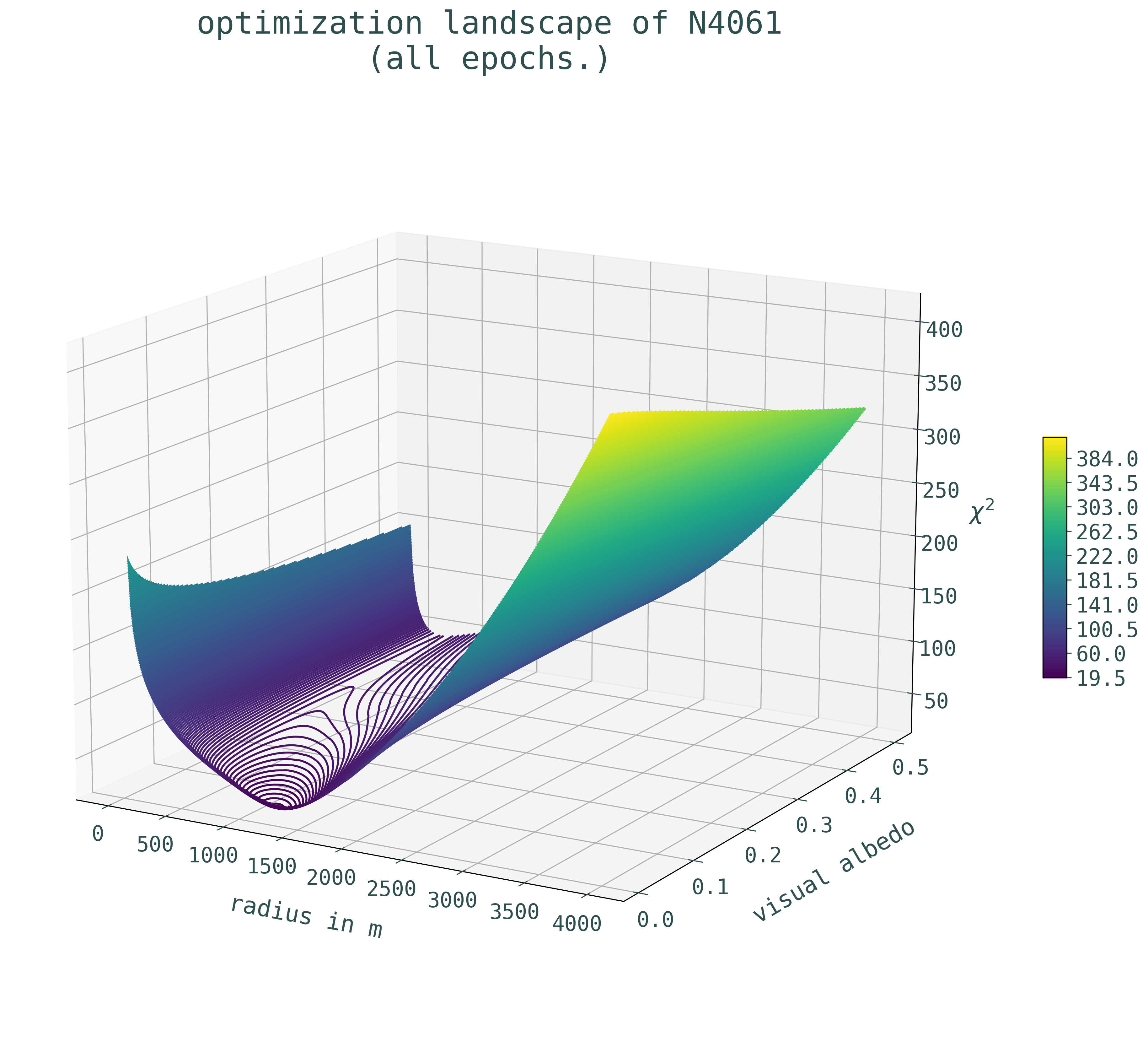

1. Determining the ideal optimization algorithm: I created plots and analytical functions to explore whether the loss landscape was convex or nonconvex, independently read about and implemented various nonlinear optimization algorithms from the SciPy and NLopt libraries, and compared their execution speed and accuracy. Besides identifying the best optimization algorithm for the thermal modeling pipeline, a secondary motivation was testing whether the standard errors of the fits could be recovered from the Hessian. Although second-order optimization methods are slower, recovering standard errors this way would reduce overall modeling time, since it is cheaper than running n Monte Carlo simulations.

2. Pipeline development & validation: I helped parallelize the thermal modeling pipeline using Python's multiprocessing package. The other major task was validating the pipeline's reliability. For this, I wrote a script that generated synthetic asteroids using characteristics of real observational data as the prior, and then tested whether the diameters and albedos recovered by the new pipeline were close to the true values of the synthetic objects.

3. Characterization and analysis of 2200 NEAs: Once the pipeline had been sufficiently developed and validated, I ran it on all of our NEA observational data, inspected the validity of the fits (re-fitting poor fits with more appropriate parameters), and presented the final results in a talk at the Planetary Defense Conference in Vienna.
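The Hessian-versus-Monte-Carlo trade-off from item 1 can be illustrated with a small, self-contained sketch. It fits a hypothetical two-parameter exponential model (a stand-in, not NEATM) using SciPy's `least_squares`, estimates standard errors from the Gauss-Newton approximation to the Hessian, $(J^\top J)^{-1}$, and compares them with standard errors obtained by refitting Monte Carlo realizations of the data. The model, noise level, and number of trials are illustrative assumptions.

```python
import numpy as np
from scipy.optimize import least_squares


def model(params, x):
    # Hypothetical two-parameter stand-in for a thermal model (NOT NEATM).
    a, b = params
    return a * np.exp(-b * x)


def fit(x, y, sigma, p0):
    # Residuals are normalized by the measurement uncertainty sigma.
    res = least_squares(lambda p: (model(p, x) - y) / sigma, p0)
    return res.x, res.jac


def hessian_standard_errors(jac):
    # Gauss-Newton approximation: covariance ~ (J^T J)^{-1} for sigma-normalized residuals.
    cov = np.linalg.inv(jac.T @ jac)
    return np.sqrt(np.diag(cov))


def monte_carlo_standard_errors(x, best, sigma, n_trials, rng):
    # Refit n_trials synthetic datasets drawn around the best-fit model.
    fits = [fit(x, model(best, x) + rng.normal(0, sigma, x.size), sigma, best)[0]
            for _ in range(n_trials)]
    return np.std(fits, axis=0, ddof=1)


rng = np.random.default_rng(0)
x = np.linspace(0, 5, 40)
sigma = 0.05
y = model([2.0, 0.7], x) + rng.normal(0, sigma, x.size)

best, jac = fit(x, y, sigma, p0=[1.0, 1.0])
se_hess = hessian_standard_errors(jac)                          # one matrix inverse
se_mc = monte_carlo_standard_errors(x, best, sigma, 300, rng)   # 300 extra fits
print("best fit:", best)
print("Hessian SE:", se_hess, "Monte Carlo SE:", se_mc)
```

When the loss is close to quadratic around the minimum, the two estimates agree while the Hessian route costs a single matrix inversion instead of hundreds of extra fits; when the landscape is strongly nonconvex or the parameters are poorly constrained, the Monte Carlo estimate is the safer choice.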
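The synthetic-asteroid validation in item 2 can be sketched as follows, assuming the standard relation between diameter $D$ (km), visible geometric albedo $p_V$, and absolute magnitude $H$: $D = 1329\,\text{km} / \sqrt{p_V} \times 10^{-H/5}$. The sketch draws a synthetic population, derives each object's $H$, and scores fitted diameters by fractional error. The priors (log-uniform $D$, log-normal $p_V$) and the 5% scatter standing in for pipeline output are hypothetical placeholders, not the data-driven priors or the thermal-modeling pipeline described above.

```python
import numpy as np


def h_from_diameter(d_km, p_v):
    """Absolute magnitude H from D = 1329 km / sqrt(p_V) * 10**(-H / 5)."""
    return 5 * np.log10(1329.0 / (d_km * np.sqrt(p_v)))


def diameter_from_h(h, p_v):
    """Diameter in km from absolute magnitude H and visible geometric albedo p_V."""
    return 1329.0 / np.sqrt(p_v) * 10 ** (-h / 5)


def synthetic_population(n, rng):
    """Draw n synthetic NEAs. The priors here are illustrative placeholders; a real
    validation run would sample from distributions fit to observed data instead."""
    d_km = 10 ** rng.uniform(-1.5, 1.0, n)                          # ~0.03 to 10 km
    p_v = np.clip(rng.lognormal(np.log(0.15), 0.6, n), 0.01, 0.9)
    return d_km, p_v, h_from_diameter(d_km, p_v)


def fractional_errors(true, fitted):
    """Signed fractional error (fitted - true) / true for each object."""
    return (np.asarray(fitted) - true) / true


rng = np.random.default_rng(42)
d_true, p_true, h = synthetic_population(2000, rng)

# Placeholder for the thermal-modeling pipeline: true diameters plus 5% scatter.
d_fit = d_true * (1 + rng.normal(0, 0.05, d_true.size))

err = fractional_errors(d_true, d_fit)
print(f"median |dD/D| = {np.median(np.abs(err)):.3f}; "
      f"fraction within 10%: {np.mean(np.abs(err) < 0.1):.1%}")
```

Because each synthetic object is fit independently, the fitting step maps naturally onto something like `multiprocessing.Pool.map` over the objects.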

Tucson Effective Altruism (TEA)

May 2022 - July 2025

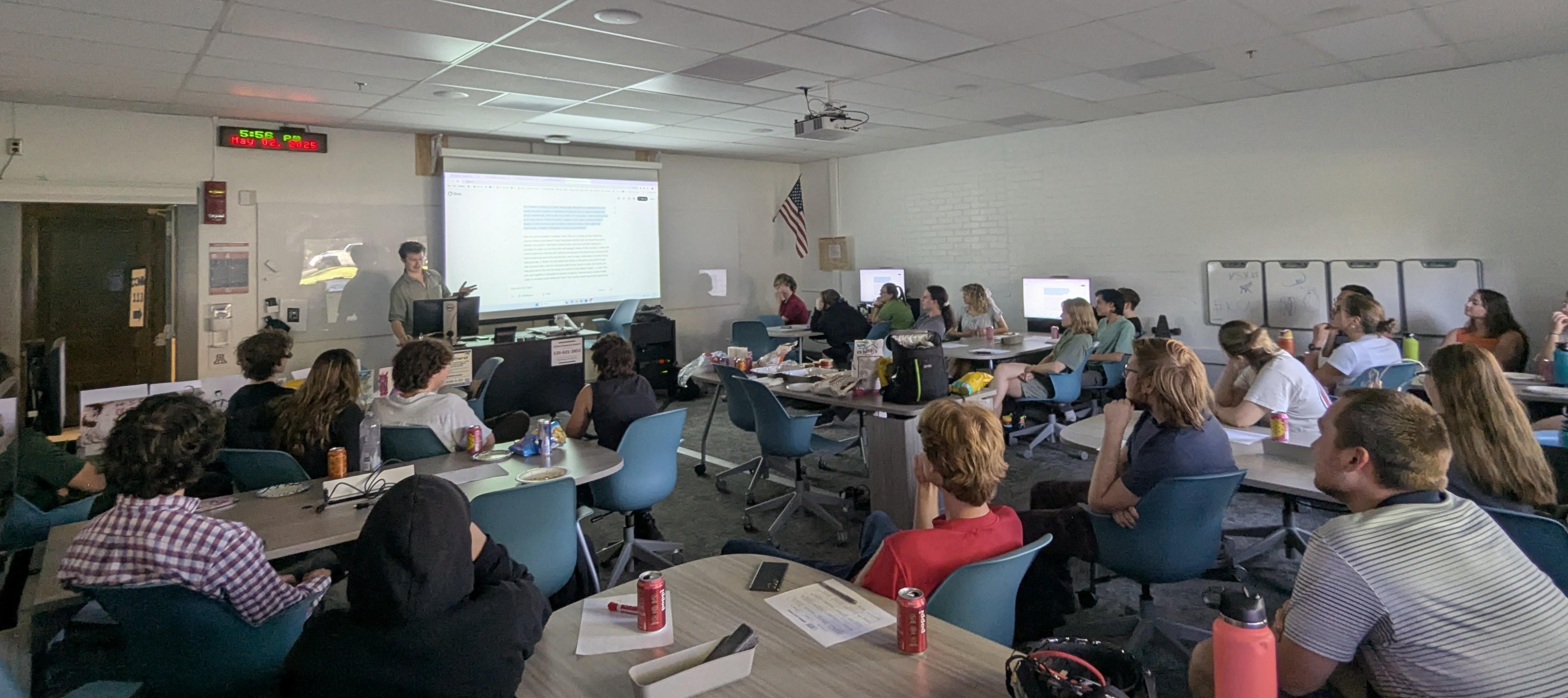

Tucson Effective Altruism is an organization I co-founded and scaled at the University of Arizona, and it is the most entrepreneurial experience I have had so far. We started scrappy: two people running small discussion groups. By the end of year three, we had ten organizers on the team collectively dedicating 35-40 hours each week, and our regular operations included a university course, a career-planning program, research-style symposia, and weekly community socials.

Here is the list from LinkedIn and my resume describing the many hats I wore:

— Spearheaded all core operations such as running weekly meetings, delegating tasks to a 10-person team, leading outreach initiatives, course logistics, and optimal management of a ~$3K annual budget

— Negotiated with the philosophy department leadership to convert our pilot 11-week discussion program into an accredited University of Arizona course, with 75+ participants over three years

— Drafted and improved the course's syllabus and readings, facilitated weekly discussions, and handled teaching assistant duties such as grading and office hours

— Amplified membership and awareness by delivering dozens of in-class pitches (with 100-600 attendees), hosting tabling events, and executing targeted email and social-media campaigns

— Doubled our Fall '24 & Spring '25 early-semester email list sign-ups from 75 to 160+ per semester through A/B-tested messaging and improved in-person outreach

— Leveraged Mailchimp, Qualtrics, Substack, CampusGroups' web-hosting platform, and D2L to automate email campaigns, process applications, distribute newsletters, and host course content

— Hosted catered symposia exploring topics within moral philosophy, AI safety, global health, career planning, and cause prioritization, attracting 25+ attendees

— Developed and iterated on a writing-focused career development program, and offered 1-on-1 career guidance to students

— Conducted post-mortems and targeted interviews after each term to improve organizational efficiency and member satisfaction

Physical characterization of the potentially hazardous asteroid (99942) Apophis

January 2021 - April 2022

Undergraduate Research Assistant, NEOWISE

Summary: We used a scalar optimization algorithm built around NEATM (Harris, 1998) and a Markov chain Monte Carlo algorithm using a thermophysical model (Wright, 2007) to physically characterize the near-Earth asteroid (99942) Apophis. Our computed effective diameter, albedo, and thermal inertia agreed with previous studies of the object, and the final results were published as a first-author paper in The Planetary Science Journal. Because Apophis is an important potentially hazardous asteroid, and because this work was part of a larger planetary defense exercise, the paper also received some media coverage.
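For readers unfamiliar with MCMC, the sketch below shows the core of a random-walk Metropolis sampler on a toy one-parameter problem: the mean of Gaussian data with known scatter. It only illustrates the sampling step. The Apophis analysis paired MCMC with a thermophysical model, whereas the likelihood, prior, step size, and chain length here are all illustrative assumptions.

```python
import numpy as np


def metropolis(log_post, x0, step, n_steps, rng):
    """Random-walk Metropolis sampler for a one-dimensional posterior."""
    chain = np.empty(n_steps)
    x, lp = x0, log_post(x0)
    accepted = 0
    for i in range(n_steps):
        proposal = x + rng.normal(0.0, step)        # symmetric Gaussian proposal
        lp_prop = log_post(proposal)
        # Accept with probability min(1, p(proposal) / p(x)), computed in log space.
        if np.log(rng.uniform()) < lp_prop - lp:
            x, lp = proposal, lp_prop
            accepted += 1
        chain[i] = x
    return chain, accepted / n_steps


rng = np.random.default_rng(7)
data = rng.normal(3.0, 1.0, 50)                     # toy observations, known sigma = 1


def log_posterior(mu):
    if mu <= 0:                                     # flat prior restricted to mu > 0
        return -np.inf
    return -0.5 * np.sum((data - mu) ** 2)          # Gaussian log-likelihood (up to a constant)


chain, acc_rate = metropolis(log_posterior, x0=1.0, step=0.3, n_steps=20000, rng=rng)
samples = chain[2000:]                              # discard burn-in
print(f"posterior mean {samples.mean():.3f} +/- {samples.std():.3f}, acceptance {acc_rate:.2f}")
```

With a flat prior and a Gaussian likelihood, the posterior mean should land close to the sample mean of `data`, with a spread near 1/√50 ≈ 0.14.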